
Neural Networks For Feature Analysis

Introductions

Mayank Barad

Rising Senior in Computer Engineering and Computer Science

Daksh Khetarpaul

Rising Junior in Computer Engineering

Katherine Lew

Rising Sophomore in Finance and Computer Science

Advisors - Dr Richard Howard, Dr Richard Martin

Project Description

Neural networks have a long history of use in classification and, more recently, in content generation. Example classifiers include image classifiers that distinguish dogs from cats and text sentiment classifiers; example generative networks produce human faces, other images, and text. Rather than classification or generation, this work explores using networks for feature analysis. Intuitively, features are the high-level patterns that separate data, such as text and images, into different classes. Our goal is to use bee motion datasets to qualitatively assess how easy or difficult it is to reverse-engineer the features found by the neural networks.

Week 1

- Understanding the purpose of the project

- Setting up GitHub and iLab accounts

- Getting familiar with Neural Networks

Week 2

- Visited the beehive to observe the behavior of real bees

- Built a prototype simulator with pygame; rejected it because its motion looked visibly unrealistic

- Integrated a "Power Law" to make the bee motion more natural
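The page does not say which power law was used. One common choice for natural-looking animal movement is a Lévy-flight-style walk whose step lengths follow p(x) ∝ x^(-alpha) for x ≥ x_min. The sketch below assumes that model; `x_min`, `alpha`, and both function names are hypothetical, and step lengths are drawn by inverse-transform sampling.

```python
import math
import random


def power_law_step(rng: random.Random, x_min: float = 1.0, alpha: float = 2.0) -> float:
    """Draw a step length from p(x) ∝ x^(-alpha) for x >= x_min (requires alpha > 1)."""
    u = rng.random()  # uniform in [0, 1), so 1 - u is in (0, 1]
    # Inverse-transform sampling of the Pareto-type tail; result is always >= x_min.
    return x_min * (1.0 - u) ** (-1.0 / (alpha - 1.0))


def power_law_walk(n_steps: int, seed: int = 0, x_min: float = 1.0, alpha: float = 2.0):
    """Return (x, y) positions: uniformly random headings, power-law step lengths."""
    rng = random.Random(seed)
    x, y = 0.0, 0.0
    path = [(x, y)]
    for _ in range(n_steps):
        heading = rng.uniform(0.0, 2.0 * math.pi)
        step = power_law_step(rng, x_min, alpha)
        x += step * math.cos(heading)
        y += step * math.sin(heading)
        path.append((x, y))
    return path
```

Most steps are short, with occasional long jumps; in a pygame simulator you would likely cap each step at the screen size.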

First Prototype → Applying "Power Law"

Week 3

- Randomness Function: We wrote a function that lets the user adjust the degree of randomness of the synthetic bee motion along a spectrum from 0.0 to 1.0. At 0.0 the "bee" moves completely randomly; at 1.0 it follows a distinct non-random pattern, such as a clockwise circle.

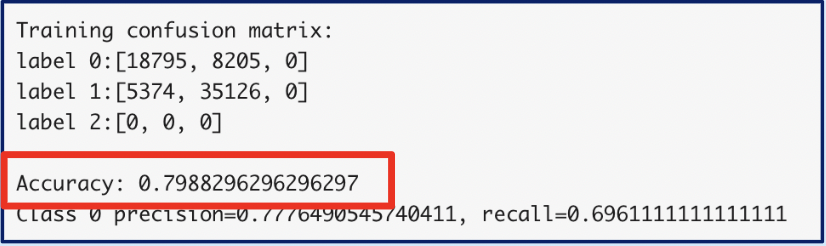
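A minimal sketch of such a randomness function, assuming each step either continues a clockwise circle (with probability equal to the setting) or picks a uniformly random heading. The name `simulate_bee` and the parameters `step`, `turn`, and `seed` are hypothetical; the original simulator may blend the two behaviors differently.

```python
import math
import random


def simulate_bee(level: float, n_steps: int = 200, step: float = 2.0,
                 turn: float = math.radians(10), seed: int = 0):
    """Hypothetical randomness function.

    level = 0.0 -> every step takes a uniformly random heading.
    level = 1.0 -> every step turns clockwise by `turn`, tracing a circle.
    In between, each step follows the circle with probability `level`.
    """
    if not 0.0 <= level <= 1.0:
        raise ValueError("level must be in [0, 1]")
    rng = random.Random(seed)
    x, y, heading = 0.0, 0.0, 0.0
    path = [(x, y)]
    for _ in range(n_steps):
        if rng.random() < level:
            heading -= turn  # clockwise when the y axis points up
        else:
            heading = rng.uniform(0.0, 2.0 * math.pi)
        x += step * math.cos(heading)
        y += step * math.sin(heading)
        path.append((x, y))
    return path
```

With the default 10-degree turn, `simulate_bee(1.0, n_steps=36)` closes a full circle and returns to the origin. Note that pygame's y axis points down, so the same heading update appears anticlockwise on screen unless y is flipped when drawing.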

- Train model: We used the randomness function to generate training data for a machine learning model (AlexNet-like) that tries to distinguish random from non-random behavioral patterns. The model identified randomness with an accuracy of 0.798, and it also produced a confusion matrix.

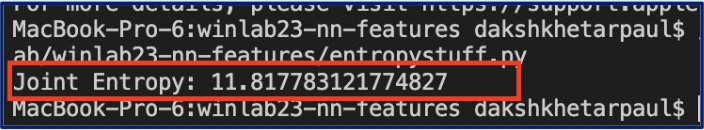
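For reference, accuracy is the fraction of samples that fall on the diagonal of the confusion matrix. A small pure-Python sketch; the class labels `"random"` and `"pattern"` are hypothetical.

```python
def confusion_matrix(y_true, y_pred, labels):
    """Count predictions: rows are the true class, columns the predicted class."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


def accuracy(matrix):
    """Fraction of all samples on the diagonal (correct predictions)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


m = confusion_matrix(["random", "random", "pattern", "pattern"],
                     ["random", "pattern", "pattern", "pattern"],
                     labels=["random", "pattern"])
print(m)            # [[1, 1], [0, 2]]
print(accuracy(m))  # 0.75
```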

- Shannon's Entropy: We researched Shannon's entropy as a measure of the randomness in the motion data, to compare against the model's accuracy, and wrote a program that automates the calculation of the joint entropy of two discrete random variables in the system (e.g., angle and distance).
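The joint entropy of two discrete variables is H(X, Y) = -Σ p(x, y) log2 p(x, y), summed over the observed (x, y) pairs. A minimal sketch, assuming continuous angle and distance values are first discretized into fixed-width bins; the bin widths and function names are hypothetical.

```python
import math
from collections import Counter


def joint_entropy(xs, ys):
    """Joint Shannon entropy H(X, Y), in bits, of two paired discrete sequences."""
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    counts = Counter(zip(xs, ys))  # empirical frequency of each (x, y) pair
    n = len(xs)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def to_bins(values, width):
    """Map continuous values (e.g. angles in degrees) to integer bin indices."""
    return [math.floor(v / width) for v in values]


# Four equally likely pairs -> log2(4) = 2 bits.
print(joint_entropy([0, 0, 1, 1], [0, 1, 0, 1]))  # 2.0
```

For simulator output this might be called as `joint_entropy(to_bins(angles, 30), to_bins(distances, 1.0))`; the choice of bin width changes the absolute entropy value, so it should stay fixed when comparing randomness settings.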

Week 4/5

- Validate results: We discovered a mistake in our training data, so last week's training results are invalid. The input data contained a bias, so the model learned irrelevant cues instead of the intended motion patterns.

- Retrain model: We retrained the machine learning model on simpler test cases, such as the black-and-white frame test. With simple black and white classes, the model reached 100% accuracy; with more complicated classes, it reached 98% accuracy.

- Reformat tar files: We changed the program that formats the training data. Instead of combining the frames from the random bee simulator into a video, we now package the data as a tar file containing a PNG, a class, and a metadata file for each frame in the simulation. We will use these tar files as training data for the model.
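A minimal sketch of that packaging step using Python's standard `tarfile` module. It assumes each frame is already encoded as PNG bytes and that the three files for a frame share a basename (`frame_000000.png`, `.cls`, `.json`), the grouping convention used by WebDataset-style loaders. The naming, the JSON metadata format, and the function names are assumptions, not the project's actual code.

```python
import io
import json
import tarfile


def add_bytes(tar: tarfile.TarFile, name: str, data: bytes) -> None:
    """Add an in-memory file to an open tar archive."""
    info = tarfile.TarInfo(name=name)
    info.size = len(data)
    tar.addfile(info, io.BytesIO(data))


def write_frames(fileobj, frames):
    """Write (png_bytes, class_label, metadata_dict) frames to a tar archive.

    Each frame becomes three members sharing a hypothetical basename, e.g.
    frame_000000.png, frame_000000.cls, frame_000000.json.
    """
    with tarfile.open(fileobj=fileobj, mode="w") as tar:
        for i, (png, label, meta) in enumerate(frames):
            key = f"frame_{i:06d}"
            add_bytes(tar, f"{key}.png", png)
            add_bytes(tar, f"{key}.cls", str(label).encode("utf-8"))
            add_bytes(tar, f"{key}.json", json.dumps(meta).encode("utf-8"))
```

Passing an open file (or an `io.BytesIO`) as `fileobj` leaves it open after the archive is written, so the caller can seek back and read the result.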

Week 6

- Time-Varying Features: To train the model to capture time-varying features (and hence motion), we increased the number of input channels while keeping the same kernel size. This works for small movements in the training data.

- Clockwise-Anticlockwise Test: With time-varying features accounted for, we began training the model on patterns of motion instead of simple black and white frames. For instance, we created training data with one class of frames that move clockwise and another class that move anticlockwise. Can the model detect left (anticlockwise) turns?

- Entropy vs. Accuracy Graphs: We graphed our model output data to examine how accuracy varies with entropy.
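One reading of "increasing the channels" is to stack consecutive frames as the input channels of a CNN, so that a 2-D kernel sees several time steps at once. The sketch below assumes that reading and uses NumPy to render a dot moving clockwise or anticlockwise around a circle, producing a dataset of shape (clips, frames, height, width) with labels 0 = anticlockwise and 1 = clockwise. The frame count, image size, radius, and function names are all hypothetical.

```python
import math

import numpy as np


def circle_clip(clockwise: bool, n_frames: int = 8, size: int = 32,
                radius: float = 10.0, step_deg: float = 15.0, start_deg: float = 0.0):
    """Render a dot moving around a circle; returns shape (n_frames, size, size)."""
    frames = np.zeros((n_frames, size, size), dtype=np.float32)
    c = size / 2.0
    direction = -1.0 if clockwise else 1.0  # decreasing angle = clockwise on screen
    for t in range(n_frames):
        a = math.radians(start_deg + direction * step_deg * t)
        col = int(round(c + radius * math.cos(a)))
        row = int(round(c - radius * math.sin(a)))  # image rows grow downward
        frames[t, row, col] = 1.0
    return frames


def make_dataset(n_per_class: int = 4, seed: int = 0):
    """Stack clips so each clip's frames become its input channels."""
    rng = np.random.default_rng(seed)
    xs, ys = [], []
    for label, clockwise in ((0, False), (1, True)):
        for _ in range(n_per_class):
            start = float(rng.uniform(0.0, 360.0))  # random starting position
            xs.append(circle_clip(clockwise, start_deg=start))
            ys.append(label)
    return np.stack(xs), np.array(ys)
```

Because the frames sit on the channel axis, a convolution with `in_channels` equal to the frame count (for example PyTorch's `nn.Conv2d`) could consume each clip directly, with its kernel size unchanged.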

Attachments (30)

- unnamed.jpg (65.5 KB): bee garage

- jitterbug.mov (155.9 KB)

- powerbee.mov (245.4 KB)

- powerbee2.mov (194.8 KB)

- 0.0.mov (275.2 KB)

- 0.1.mov (275.5 KB): Bee simulator set at 0.1 randomness

- 0.3.mov (294.6 KB): Simulator set at 0.3 randomness

- 0.9.mov (291.0 KB): Simulator set at 0.9 randomness

- Screen Shot 2023-07-13 at 2.16.03 PM.png (40.1 KB)

- Screen Shot 2023-07-13 at 2.16.03 PM.2.png (40.1 KB): Shannon's entropy function

- Screen Shot 2023-07-19 at 10.44.07 AM.png (286.4 KB)

- Screen Shot 2023-07-19 at 10.46.33 AM.png (104.1 KB)

- Screen Shot 2023-07-19 at 11.40.37 AM.png (19.7 KB)

- Screen Shot 2023-07-19 at 11.36.05 AM.png (114.0 KB)

- Screen Shot 2023-07-19 at 11.38.38 AM.png (58.9 KB)

- Screen Shot 2023-07-19 at 12.23.26 PM.png (42.0 KB)

- anticlockwise.gif (2.4 MB)

- clockwise.gif (2.7 MB)

- yesbias_AdobeExpress.gif (1.1 MB)

- nobias_AdobeExpress.gif (818.2 KB)

- Screen Shot 2023-08-07 at 3.11.34 PM.png (95.6 KB)

- Screen Shot 2023-08-07 at 3.22.19 PM.png (83.0 KB)

- 0.0.gif (239.9 KB)

- 0.3.gif (146.5 KB)

- 0.4.gif (152.2 KB)

- 0.9.gif (148.0 KB)

- jitterbug2.gif (413.3 KB)

- 0.1.gif (152.2 KB)

- NN_poster.pptx.png (1004.9 KB)

- NN_poster.pptx.pdf (607.2 KB)