| Version 51 (modified by , 19 months ago) ( diff ) |

|---|

AI For Behavioral Discovery

Team: Adarsh NarayananUG, Benjamin YuUG, Elias XuHS, Shreyas MusukuHS

Advisors: Dr. Richard Martin and Dr. Richard Howard

Project Description & Goals:

The past 40 years has seen enormous increases in man-made Radio Frequency (RF) radiation. However, the possible small and long term impacts of RF radiation are not well understood. This project seeks to discover if RF exposure impacts animal behaviors. In this experimental paradigm, animals are subject to RF exposure while their behaviors are video recorded. Deep Neural Networks (DNNs) are then tasked to correctly classify if the video contains exposure to RF or not. This uses DNNs as powerful pattern discovery tools, in contrast to their traditional uses of classification and generation. The project involves evaluating the accuracies of a number of DNN architectures on pre-recorded videos, as well as describe any behavioral patterns found by the DNNs.

Weekly Progress:

- Created synthetic data to train a model to perform binary classification based on linear vs curved path (due to presence of distortion field) of a single bee flight to home.

- Gathered further insight to how data preparation and model training can work for the real dataset.

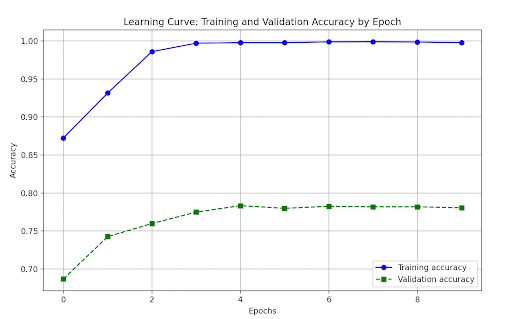

1 frame per sample:

4 frames per sample:

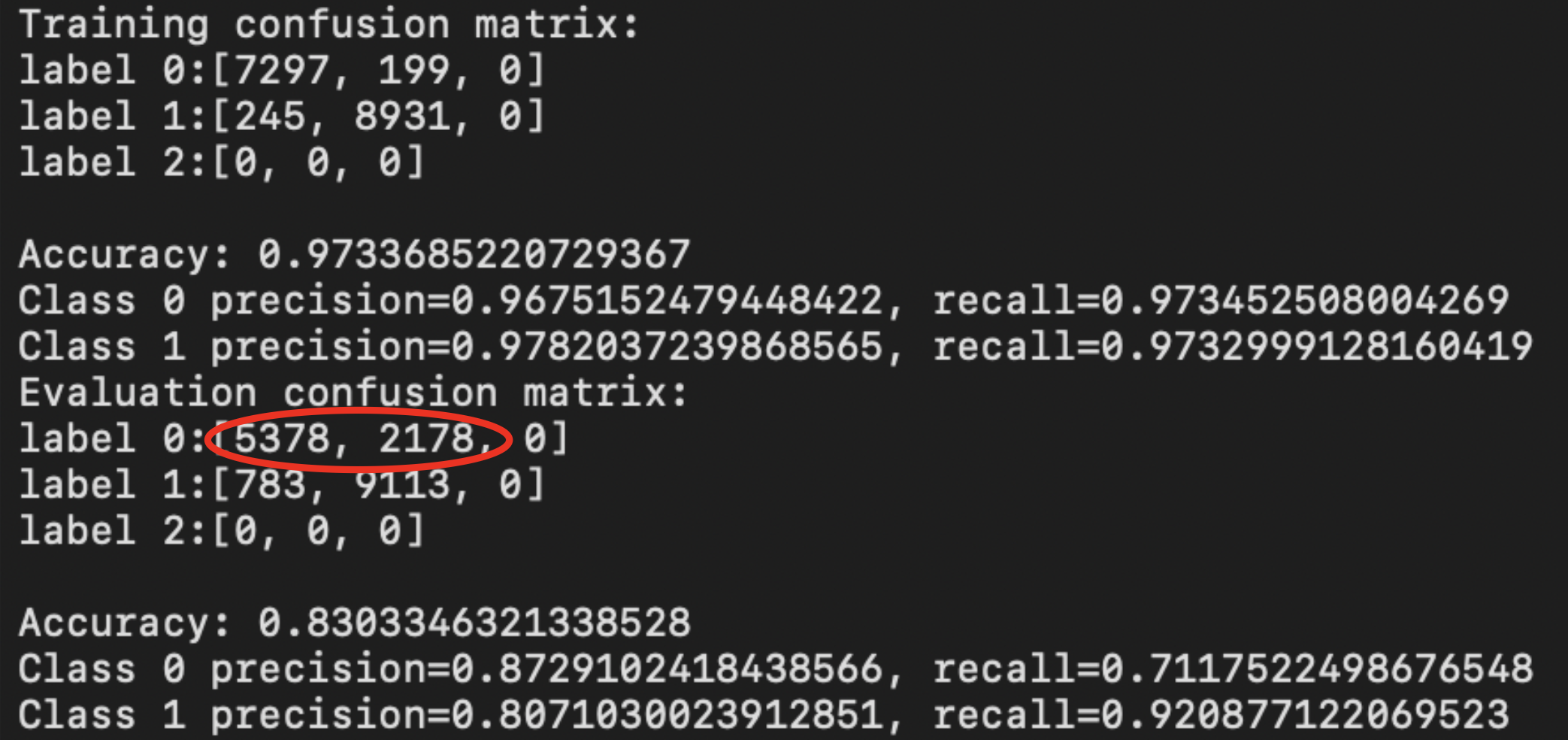

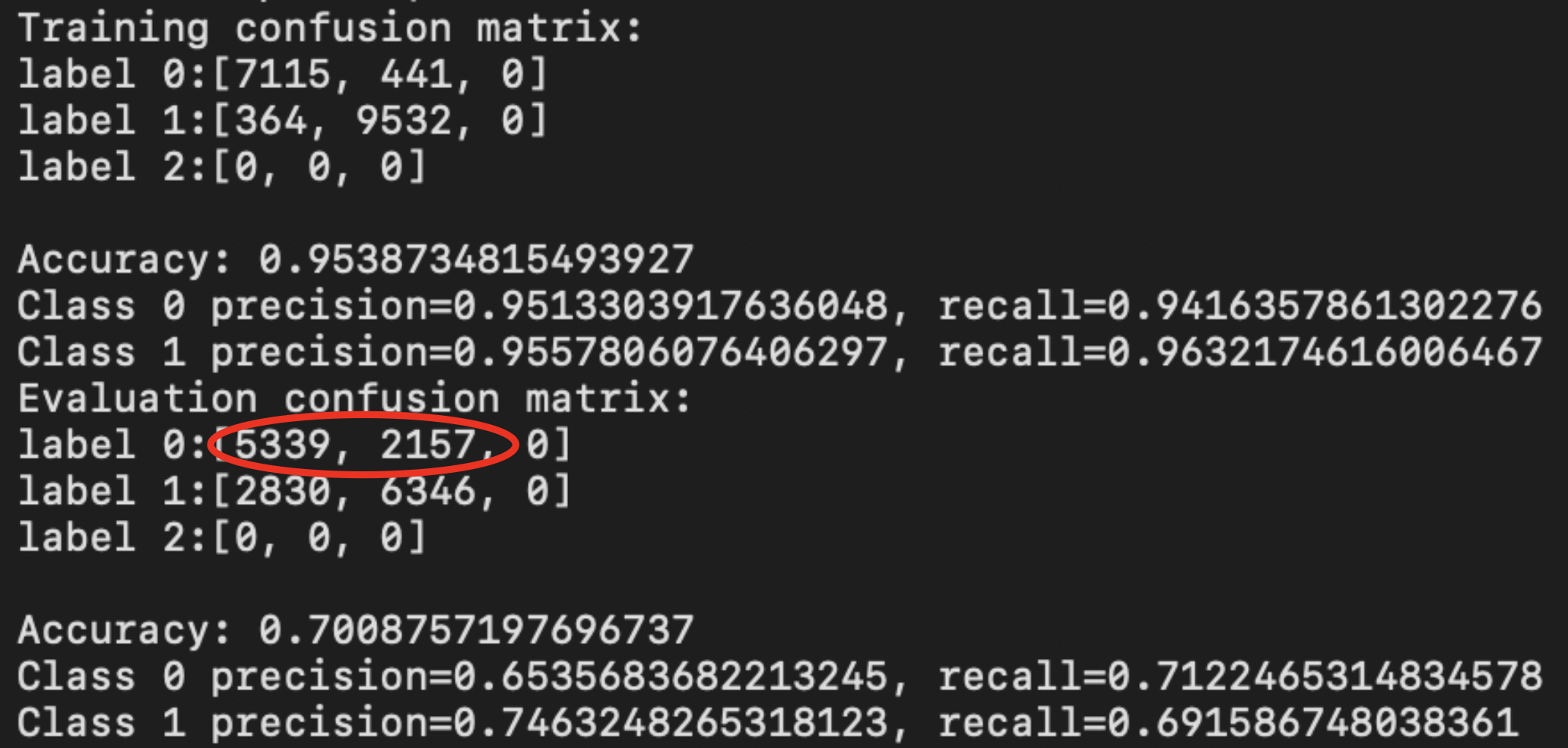

Confusion matrices (bias towards class 1, where field is on); Overfitted (expected because the scenario the data is trying to emulate is oversimplified for the complex model):

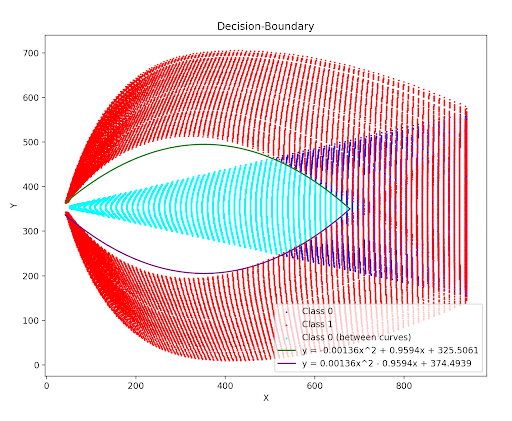

Calculated a hypothetical decision boundary

If our hypothetical recall ~= actual recall ⇒ hypothetical decision boundary might actually represent the model's decision boundary

Class 0 hypothetical recall (between curves / total actual class 0): 0.6070519810977826

Actual Class 0 Recall (tested among 500 samples): ~0.896

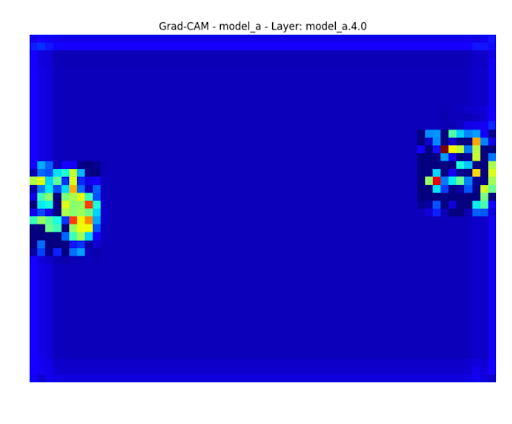

Gathered grad-cam heat-maps

→ Gave us insight and confirmation that the home and bee were being used as features

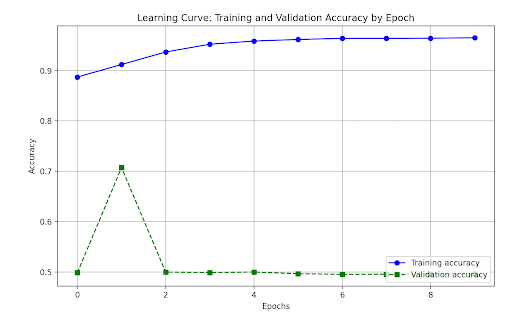

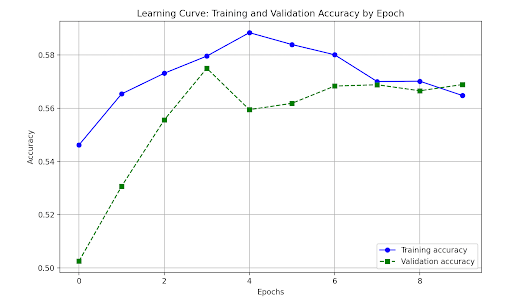

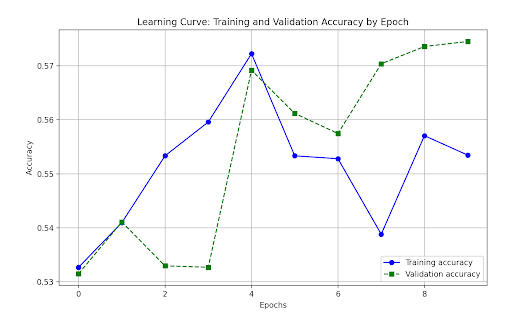

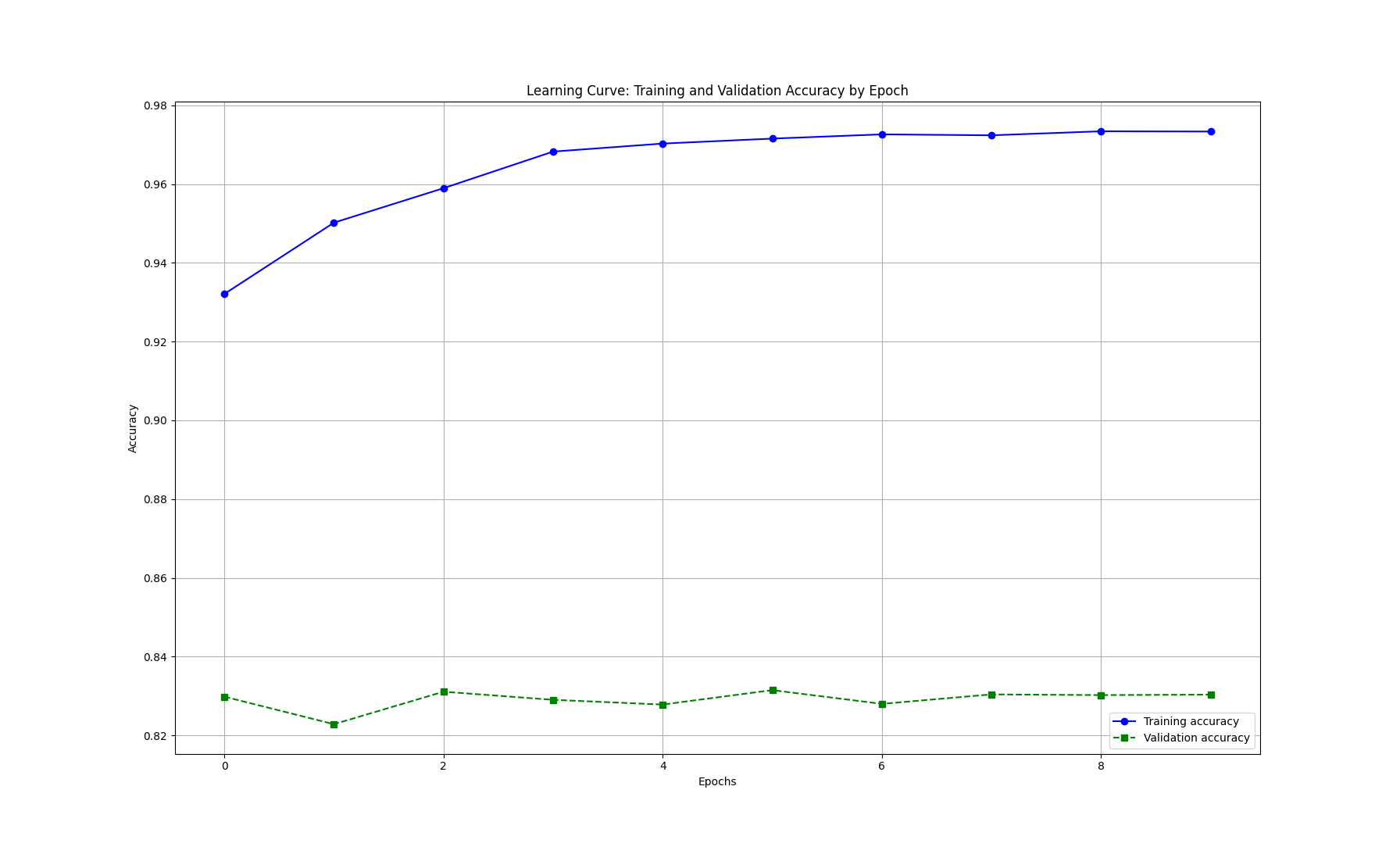

Week 4's dataset results (left - 1 frame/sample, right - 4 frames/sample)

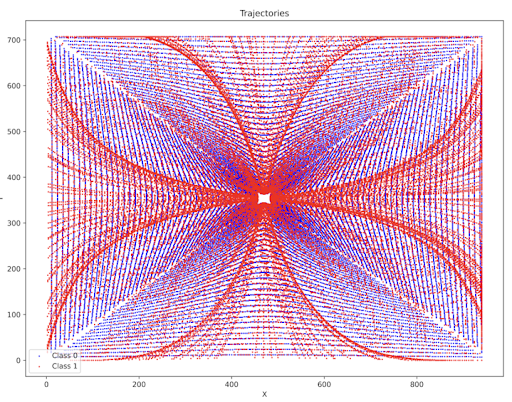

First iteration of radial dataset - Radial entries, 200 entries per side, normalized vectors, fixed center home, fixed field magnitude, 4 possible field directions → significantly improved location distribution of both classes

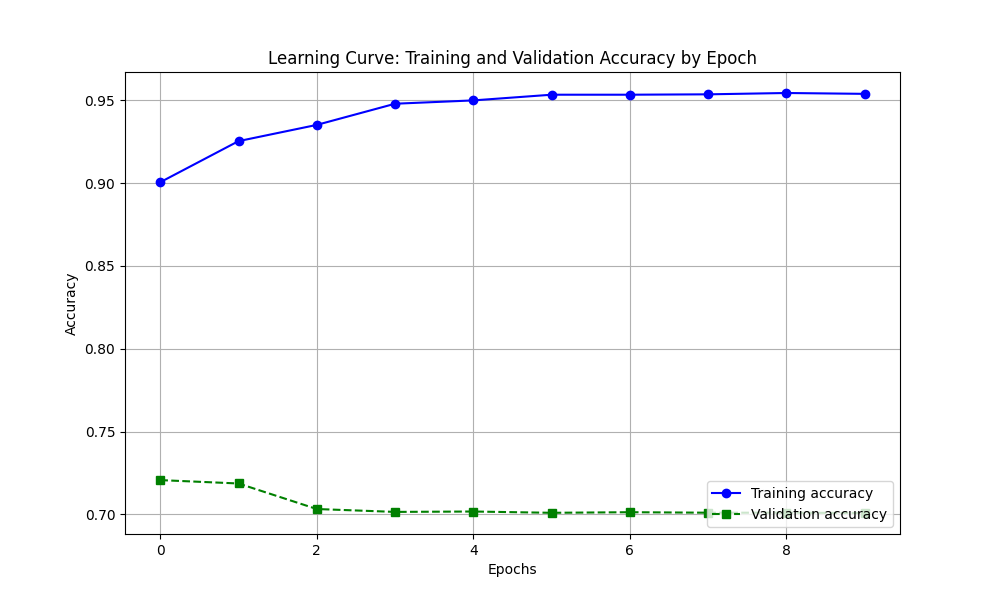

First radial iteration's training results (left - 1 frame/sample, right - 4 frames/sample)

Grad-CAM plot for one of the layers- model seems to be focused at background instead of bee

→ Similar result accuracies between 1 and 4 frames/sample could suggest that the model is not learning motion/sequence of frames

Attachments (38)

-

Untitled drawing.png

(57.5 KB

) - added by 20 months ago.

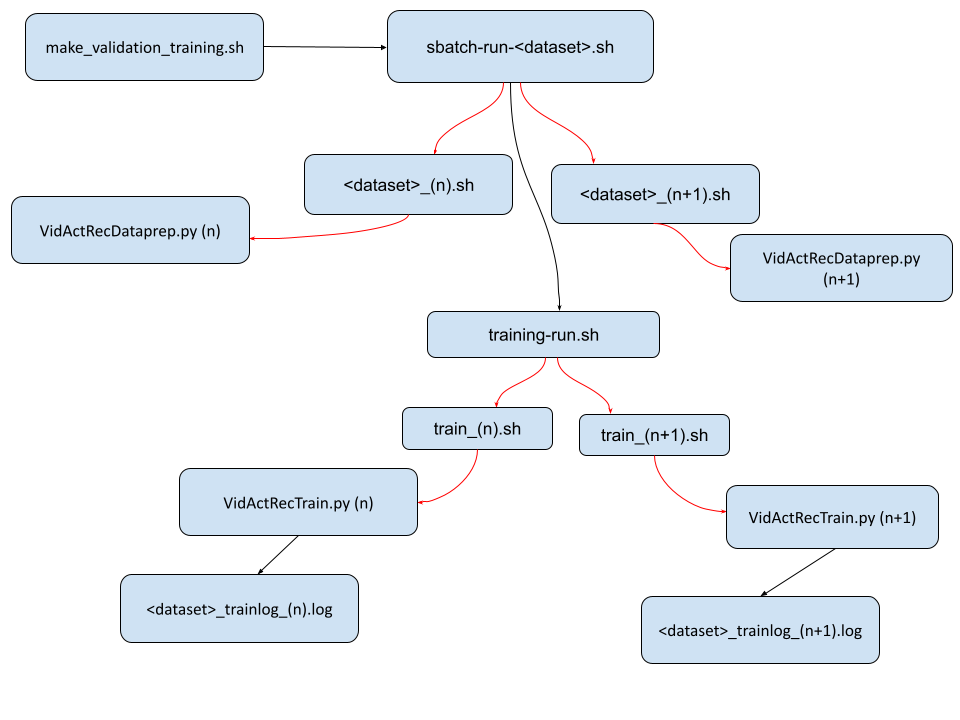

software_pipeline

-

dummy.0.0.gif

(89.4 KB

) - added by 20 months ago.

no_distortion_field

-

dummy.8.0.gif

(118.6 KB

) - added by 20 months ago.

yes_distortion_field

-

Figure_1.png

(55.7 KB

) - added by 20 months ago.

a_fold_1

-

Figure_2.png

(35.8 KB

) - added by 20 months ago.

a_fold_2

-

Figure_1 (1).png

(39.7 KB

) - added by 20 months ago.

b_fold_1

-

Figure_2 (1).png

(35.6 KB

) - added by 20 months ago.

b_fold_2

-

Screenshot 2024-06-13 at 2.18.28 PM.png

(1.7 MB

) - added by 20 months ago.

confusion_1

-

Screenshot 2024-06-13 at 2.18.47 PM.png

(1.2 MB

) - added by 20 months ago.

confusion_2

-

unnamed.png

(144.5 KB

) - added by 20 months ago.

up-down-trajectories

-

unnamed-2.png

(16.2 KB

) - added by 20 months ago.

gradcam

-

unnamed-3.png

(23.7 KB

) - added by 19 months ago.

week4-1frm-result

-

unnamed-4.png

(25.8 KB

) - added by 19 months ago.

week4-4frm-result

-

unnamed-5.png

(386.5 KB

) - added by 19 months ago.

1st iteration of radial dataset

-

unnamed-6.png

(25.3 KB

) - added by 19 months ago.

1st radial 1 frm

-

unnamed-7.png

(28.8 KB

) - added by 19 months ago.

1st radial 4 frm

-

unnamed-8.png

(15.4 KB

) - added by 19 months ago.

1st radial - gradcam

-

unnamed-9.png

(1.9 KB

) - added by 19 months ago.

week6 blank frame

-

Screenshot 2024-07-22 at 11.34.16 AM.png

(25.9 KB

) - added by 19 months ago.

week 6 contiguous frames

-

2024-01-01070811.9.0-ezgif.com-video-to-gif-converter.gif

(284.7 KB

) - added by 19 months ago.

radial simulation sped-up gif

-

unnamed-10.png

(272.6 KB

) - added by 19 months ago.

radial 2nd iteration

-

unnamed-11.png

(29.8 KB

) - added by 19 months ago.

radial 2nd 1 frame result

-

unnamed-12.png

(25.7 KB

) - added by 19 months ago.

radial 2nd 7 frm result

-

unnamed.jpg

(77.7 KB

) - added by 19 months ago.

setup1

-

unnamed-2.jpg

(82.7 KB

) - added by 19 months ago.

setup2

-

unnamed-13.png

(55.5 KB

) - added by 19 months ago.

ants

-

Screenshot 2024-07-22 at 12.12.52 PM.png

(2.1 MB

) - added by 19 months ago.

3rd iteration radial trajectory

-

unnamed-14.png

(22.4 KB

) - added by 19 months ago.

week 8 alexnet result

-

unnamed-15.png

(25.8 KB

) - added by 19 months ago.

week 8 resnet result

-

unnamed-16.png

(10.5 KB

) - added by 19 months ago.

worst classification frames

-

no-background-subtract-ezgif.com-video-to-gif-converter.gif

(24.9 MB

) - added by 19 months ago.

bee gif

-

background-subtract-ezgif.com-video-to-gif-converter.gif

(1.3 MB

) - added by 19 months ago.

bee bg gif

-

Screenshot 2024-07-29 at 2.48.26 PM.png

(489.0 KB

) - added by 19 months ago.

ant grad-cam

-

Screenshot 2024-07-29 at 2.49.53 PM.png

(586.6 KB

) - added by 19 months ago.

ant setup

-

unnamed-17.png

(18.2 KB

) - added by 19 months ago.

varying-speed results

-

unnamed-19.png

(220.4 KB

) - added by 19 months ago.

decision tree

-

unnamed-18.png

(327.8 KB

) - added by 19 months ago.

adjacent map single point

-

unnamed-20.png

(23.2 KB

) - added by 19 months ago.

single point results

.png)

.png)