
Self-Driving Vehicular Project

Team: Aaron Cruz [UG], Arya Shetty [UG], Brandon Cheng [UG], Tommy Chu [UG], Vineal Sunkara [UG], Erik Nießen [HS], Siddarth Malhotra [HS]

Advisors: Ivan Seskar and Jennifer Shane

Project Description & Goals:

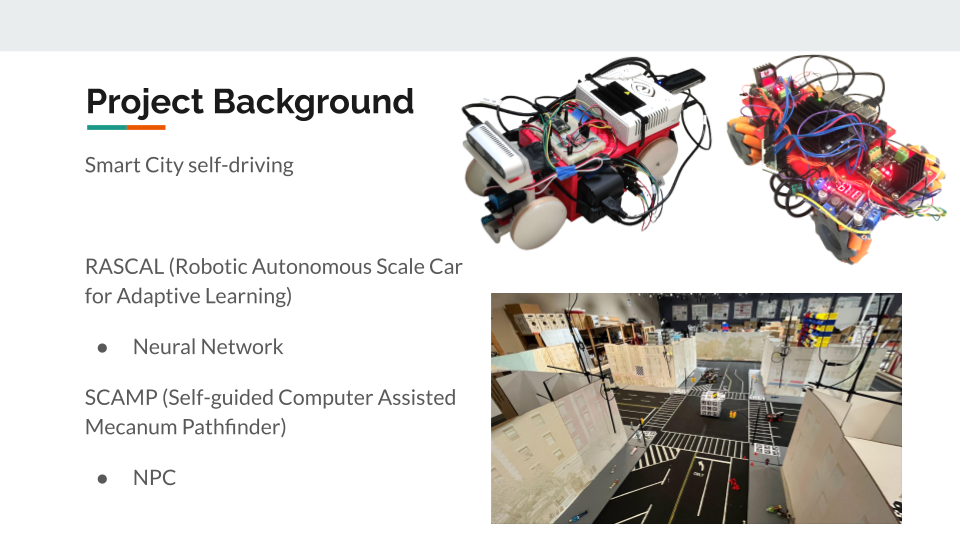

Build and train miniature autonomous cars to drive in a miniature city.

RASCAL (Robotic Autonomous Scale Car for Adaptive Learning): Using the car's sensors, offload image and control data onto a server node. This node trains a neural network that lets the vehicle drive on its own from the images it sees through its camera.
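Training requires pairing each camera frame with the control command recorded at about the same time. Below is a minimal sketch of that pairing step. It assumes frame and control timestamps in seconds, with the control timestamps sorted. The function name and the `max_gap` threshold are hypothetical and not taken from the RASCAL code:

```python
import bisect
from typing import Any, List, Optional, Sequence


def pair_frames_with_controls(
    frame_times: Sequence[float],
    control_times: Sequence[float],
    controls: Sequence[Any],
    max_gap: float,
) -> List[Optional[Any]]:
    """Match each camera frame to the control sample nearest in time.

    control_times must be sorted ascending. Frames with no control sample
    within max_gap seconds get None so they can be dropped from training.
    """
    pairs: List[Optional[Any]] = []
    for t in frame_times:
        # Index of the first control sample at or after time t.
        i = bisect.bisect_left(control_times, t)
        # The nearest sample is either just before or just after t.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(control_times)]
        if not candidates:
            pairs.append(None)
            continue
        best = min(candidates, key=lambda j: abs(control_times[j] - t))
        if abs(control_times[best] - t) <= max_gap:
            pairs.append(controls[best])
        else:
            pairs.append(None)
    return pairs
```

For example, `pair_frames_with_controls([0.0, 0.12, 0.5], [0.0, 0.1, 0.2], ["a", "b", "c"], max_gap=0.05)` returns `["a", "b", None]`. The last frame has no control sample close enough in time.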

Technologies: ROS (Robot Operating System), PyTorch

Week 1:

Progress:

- Familiarized ourselves with the previous summer's work: GitLab, RASCAL setup, Software Architecture

- Debugged an issue with RASCAL's pure pursuit controller
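The page does not say what the pure pursuit issue was. For reference, here is a minimal sketch of the standard pure pursuit steering law. It assumes the car pose is given at the rear axle as (x, y, yaw) in a world frame and uses a kinematic bicycle model. The function names and the wheelbase value in the example are hypothetical:

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def find_lookahead_point(path: Sequence[Point], position: Point, lookahead: float) -> Point:
    """Return the first path point at least `lookahead` metres from the car.

    Falls back to the last point when the whole remaining path is closer.
    """
    px, py = position
    for x, y in path:
        if math.hypot(x - px, y - py) >= lookahead:
            return (x, y)
    return path[-1]


def pure_pursuit_steering(x: float, y: float, yaw: float, target: Point, wheelbase: float) -> float:
    """Steering angle (radians, positive = left) that arcs the car onto `target`."""
    dx, dy = target[0] - x, target[1] - y
    # Rotate the world-frame offset into the car frame (x forward, y left).
    local_x = math.cos(yaw) * dx + math.sin(yaw) * dy
    local_y = -math.sin(yaw) * dx + math.cos(yaw) * dy
    dist_sq = local_x ** 2 + local_y ** 2
    if dist_sq == 0.0:
        return 0.0
    # Curvature of the circle through the rear axle and the target: k = 2 * y / L_d^2.
    curvature = 2.0 * local_y / dist_sq
    # Kinematic bicycle model: delta = atan(wheelbase * curvature).
    return math.atan(wheelbase * curvature)
```

For example, `pure_pursuit_steering(0.0, 0.0, 0.0, (1.0, 1.0), 0.3)` returns `atan(0.3)`, about 0.29 rad to the left. A common failure mode to check is a wrong sign convention or frame: if the target is not rotated into the car frame, the car steers correctly only while facing +x.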

Week 2:

Progress:

- Set up X11 forwarding for GUI applications over SSH

- Experimented with visual odometry using the RealSense camera and rtabmap

- Streamlined the data pipeline that converts bag data (car camera + control data) into .mp4 video

- Detected ArUco markers in images using Python and OpenCV

- Set up the Intersection server (a node with a GPU)

- Developed a PyTorch MNIST model

- Trained the "yellow thing" neural network

- Lined up a perspective drawing with the camera to determine its field of view (FOV)
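Here is one way to turn the perspective-drawing measurement into numbers. It assumes an ideal pinhole camera (lens distortion ignored) and a drawn span of known width that exactly fills the image width at a measured distance. The helper names are hypothetical:

```python
import math


def horizontal_fov_deg(visible_width: float, distance: float) -> float:
    """Horizontal field of view (degrees) when a span `visible_width` wide,
    `distance` away (same units), exactly fills the image from edge to edge."""
    return math.degrees(2.0 * math.atan(visible_width / (2.0 * distance)))


def focal_length_px(image_width_px: int, fov_deg: float) -> float:
    """Pinhole focal length in pixels implied by a horizontal FOV."""
    return (image_width_px / 2.0) / math.tan(math.radians(fov_deg) / 2.0)
```

A 2 m span that exactly fills the frame 1 m from the lens gives `horizontal_fov_deg(2.0, 1.0)` = 90 degrees. For 640-pixel-wide images, `focal_length_px(640, 90.0)` is about 320 pixels. A proper calibration (as done for the RealSense camera in Week 3) also recovers lens distortion, which this estimate ignores.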

Week 3:

Progress:

- Created a web display "assassin" that kills the web server when ROS is closed

- Tested the "yellow thing" model, with great results

- Set up SSHFS

- Calibrated the RealSense camera

- Added a "snap picture" button to the web display for convenience

- Developed a Python script to detect ArUco markers and estimate the camera's position

- Tested point cloud mapping with rtabmap

- Attempted sensor fusion of encoder odometry and visual odometry

- Used data augmentation to generate new camera perspectives from existing images
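The simplest way to fuse two position estimates is inverse-variance weighting, which is the Kalman filter update for a single scalar. This is shown as an illustration, not as what the team implemented. It assumes the encoder and visual odometry errors are independent and that each source reports a variance. The function name is hypothetical:

```python
from typing import Tuple


def fuse_estimates(a: float, var_a: float, b: float, var_b: float) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of two independent estimates of one quantity.

    Equivalent to a scalar Kalman measurement update: the estimate with the
    smaller variance gets the larger weight, and the fused variance is smaller
    than either input variance.
    """
    if var_a <= 0 or var_b <= 0:
        raise ValueError("variances must be positive")
    weight_a = var_b / (var_a + var_b)
    fused = weight_a * a + (1.0 - weight_a) * b
    fused_var = (var_a * var_b) / (var_a + var_b)
    return fused, fused_var
```

Apply this separately to x and y. For example, `fuse_estimates(0.0, 1.0, 4.0, 3.0)` returns `(1.0, 0.75)`: the result leans toward the first, lower-variance estimate. Headings need angle wrapping before they can be averaged. In ROS, a full EKF such as the one in the robot_localization package is the usual off-the-shelf option.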

Week 4:

Progress:

- Refined ArUco marker detection for more accurate car pose estimation

- Trained model with video instead of images

- Improved data pipeline from car sensors to server

- Refined data augmentation to simulate new camera perspectives

- Added more data visualization (Replayer) to display the steering curve, path, and images on the web server
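A path like the one the Replayer displays can be reconstructed from recorded control data by dead reckoning. Below is a minimal sketch. It assumes a kinematic bicycle model, speed (m/s) and steering angle (radians) samples at a fixed interval `dt`, and a hypothetical wheelbase. It is an illustration, not the Replayer's actual code:

```python
import math
from typing import List, Sequence, Tuple


def integrate_path(
    speeds: Sequence[float],
    steering_angles: Sequence[float],
    dt: float,
    wheelbase: float,
) -> List[Tuple[float, float]]:
    """Dead-reckon an (x, y) path from recorded speed and steering samples.

    Kinematic bicycle model referenced to the rear axle, starting at (0, 0)
    facing +x. Each sample is assumed to hold for dt seconds.
    """
    x = y = yaw = 0.0
    path = [(x, y)]
    for v, delta in zip(speeds, steering_angles):
        # Advance along the current heading, then update the heading.
        x += v * math.cos(yaw) * dt
        y += v * math.sin(yaw) * dt
        yaw += v / wheelbase * math.tan(delta) * dt
        path.append((x, y))
    return path
```

Driving straight at 1 m/s for ten 0.1 s samples ends at roughly (1.0, 0.0). A constant positive steering angle curves the path to the left (positive y). Because errors accumulate, such a path drifts over time, which is one reason for the ArUco-based position updates.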

Week 5:

Progress:

- ArUco marker detection now updates the car's position in the XY plane; finished the self-calibration system

- Addressed normalization and cropping problems

- Introduced Grad-CAM heat maps

- Resolved a Python version mismatch

- Visualized training data bias with a histogram

- Smoothed the training data to reduce inconsistencies

- Simulation camera: skews the closest existing image to simulate a new view

- Web display improvements: search commands and controller keybinds
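Below are minimal sketches of two of the training-data steps above. They assume steering angles are stored as a plain list of floats in radians. The first function is a centered moving average for smoothing. The second is a binned count for checking bias. The window size, bin width, and function names are hypothetical choices, not the project's:

```python
import math
from collections import Counter
from typing import Dict, List, Sequence


def moving_average(values: Sequence[float], window: int) -> List[float]:
    """Centered moving average. Near the ends the window is truncated,
    so the output has the same length as the input."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        chunk = values[lo:hi]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


def steering_histogram(angles: Sequence[float], bin_width: float) -> Dict[int, int]:
    """Count samples per steering bin; bin k covers [k * bin_width, (k + 1) * bin_width).

    A tall bin around zero means the data is dominated by straight driving.
    """
    counts = Counter(math.floor(a / bin_width) for a in angles)
    return {k: counts[k] for k in sorted(counts)}
```

`moving_average([0, 0, 3, 0, 0], 3)` returns `[0.0, 1.0, 1.0, 1.0, 0.0]`, spreading a one-sample spike over its neighbours. A larger window smooths more but also blunts genuine sharp turns.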

Week 6:

[Week 6 Slides]

Progress:

Week 7:

[Week 7 Slides]

Progress:

Week 8:

[Week 8 Slides]

Progress:

Week 9:

[Week 9 Slides]

Progress:

Week 10:

[Week 10 Slides]

Progress:

Additional Resources:

Attachments (5)

- Detected.png (361.9 KB)

- gitlab.png (134.3 KB)

- SDC Week 2.png (406.3 KB)

- SDC 2024 WINLAB Poster.png (2.1 MB): Poster

- SDC Open House 2024 .png (405.3 KB)